Robot Learning

What is Robot Learning?

Robot learning is a research field at the intersection of machine learning and robotics. It studies techniques allowing a robot to acquire novel skills or adapt to its environment through learning algorithms.

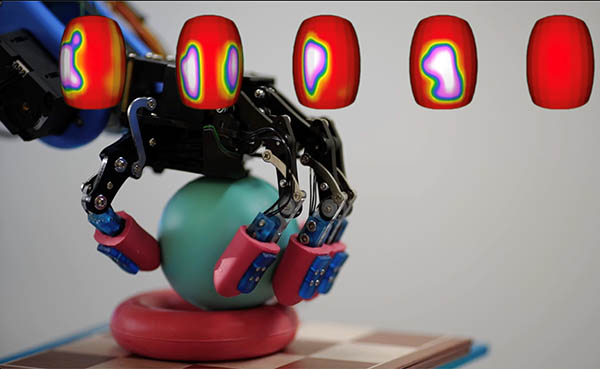

- Sensing: observe the physical world through multimodal senses

- Perception: acquire knowledge from sensor data

- Action: act on the environment to execute a task or acquire new observations

A key challenge in Robot Learning is to close the perception-action loop.
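The loop can be sketched in a few lines. This is a toy illustration rather than a real robot interface: the 1-D world, the sensor noise level, the smoothing filter, and the proportional gain are all assumptions made for the sketch.

```python
import random

def sense(true_position, rng, noise=0.01):
    """Sensing: return a noisy measurement of the (hypothetical) 1-D position."""
    return true_position + rng.uniform(-noise, noise)

def perceive(previous_estimate, measurement, alpha=0.5):
    """Perception: fuse the new measurement into a smoothed state estimate."""
    return (1.0 - alpha) * previous_estimate + alpha * measurement

def act(estimate, target, gain=0.5):
    """Action: proportional command that moves the robot toward the target."""
    return gain * (target - estimate)

def run_loop(target=1.0, steps=50, seed=0):
    """Run sense -> perceive -> act repeatedly and return the final position."""
    rng = random.Random(seed)
    position, estimate = 0.0, 0.0
    for _ in range(steps):
        measurement = sense(position, rng)
        estimate = perceive(estimate, measurement)
        position += act(estimate, target)  # acting changes what is sensed next
    return position

final_position = run_loop()
```

Each action changes the state that the next measurement observes, which is what "closing the loop" refers to. In a learned system, `perceive` and `act` would typically be replaced by trained models.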

Applications of Robot Learning

Manipulation

Locomotion

Mobile Manipulation

When Should Robots Learn?

Robots should be designed to learn in situations where pre-existing knowledge or established protocols are insufficient or non-existent, requiring them to discover knowledge from data:

- High Environmental Uncertainty

- Significant Variation in Observations

- Lack of Reliable Priors

- Complex or Unstructured Environments

- Continuous Improvement

Learning is NOT the solution to every problem in robotics.

When the task can be modeled without knowledge from data, a learning algorithm is not required (and learning algorithms tend to perform worse in such cases). Learning systems can also be combined with classical techniques.

How to Make Robots Learn?

These days, many robot learning methods are based on deep neural networks with various learning algorithms (supervised learning, unsupervised learning, reinforcement learning, etc.).

Multi-modal Sensors

LiDAR sensor

Stereo Depth sensor

RGBD camera, Microphone

IMU (Gyroscope/Accelerometer/Barometer)

Tactile sensor

Joint Position/Velocity/Torque

Deep Learning

Basics

Backpropagation

Linear/Dense Layer

Convolution Layer

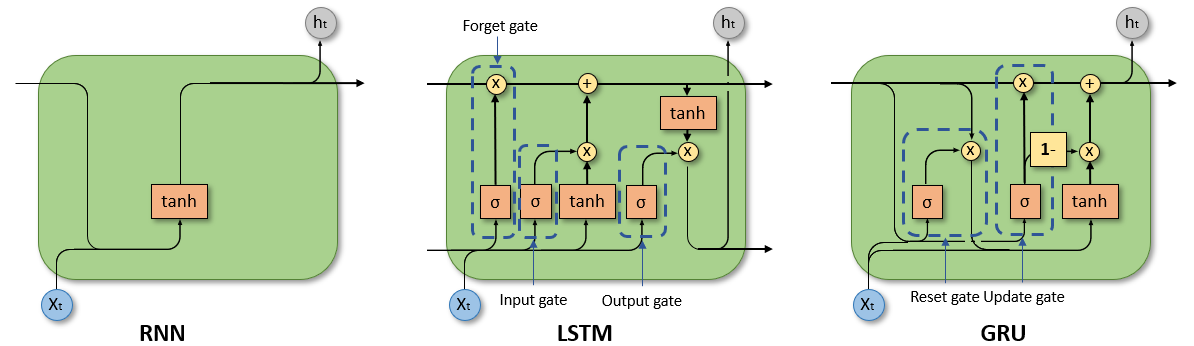

Recurrent Cells

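Scaled Dot-Product Attention

An attention mechanism where the dot products are scaled down by $\sqrt{d_k}$, where $d_k$ is the dimension of the keys:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

- Motivated by the concern that when the inputs to the softmax are large in magnitude, the softmax may have extremely small gradients, which makes efficient learning hard.

- Calculates similarity from the queries $Q$ and the keys $K$; the resulting weights are used to average the values $V$.

- Can be viewed as a differentiable dictionary.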
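The "differentiable dictionary" view can be made concrete with a small sketch. It uses plain Python lists instead of tensors for readability; the function names and the toy keys and values are assumptions made for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values, scale=True):
    """Return (outputs, weights) where outputs = softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    factor = 1.0 / math.sqrt(d_k) if scale else 1.0
    outputs, all_weights = [], []
    for q in queries:
        # Similarity between this query and every key.
        scores = [factor * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Soft lookup: a weighted average of the values instead of a hard match.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# A toy "dictionary" with two entries; the query resembles the first key.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0], [20.0]]
queries = [[1.0, 0.0]]

outputs, weights = scaled_dot_product_attention(queries, keys, values)
_, unscaled_weights = scaled_dot_product_attention(queries, keys, values, scale=False)
```

The output lies between the two stored values and is pulled toward the value of the best-matching key. Without the scaling, the same scores yield a more peaked weight distribution, which is the saturation effect the scaling is meant to reduce.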

Multi-Head Attention (MHA)

A module for attention mechanisms which runs through an attention mechanism several times in parallel.

- The multiple attention heads allow different parts of the sequence to be attended to differently.

- When

- Only a small subset of heads appears to be important for the translation task. Most heads, especially the encoder self-attention heads, can be removed without seriously affecting performance [1].
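A minimal sketch of the mechanism, again with plain Python lists: the projected features are split into `num_heads` slices, each slice runs its own scaled dot-product attention, and the results are concatenated and projected. The weight matrices `w_q`, `w_k`, `w_v`, `w_o` stand in for learned parameters and are assumptions of this sketch (here simply identity matrices).

```python
import math

def matmul(a, b):
    """Matrix product of two lists-of-rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention for one head."""
    d_k = len(k[0])
    scores = matmul(q, [list(col) for col in zip(*k)])  # q @ k^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Self-attention over x with num_heads heads run independently."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_head = len(q[0]) // num_heads
    head_outputs = []
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)  # features owned by head h
        head_outputs.append(attention([r[cols] for r in q],
                                      [r[cols] for r in k],
                                      [r[cols] for r in v]))
    # Concatenate the heads along the feature axis, then apply the output projection.
    concat = [sum((head[t] for head in head_outputs), []) for t in range(len(x))]
    return matmul(concat, w_o)

dim = 4
identity = [[1.0 if i == j else 0.0 for j in range(dim)] for i in range(dim)]
x = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
out = multi_head_attention(x, identity, identity, identity, identity, num_heads=2)
```

Because each head only sees its own feature slice, two heads can assign different attention weights to the same pair of tokens.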

Masked Multi-Head Attention

Masking of the unwanted tokens can be done by setting their attention scores to $-\infty$ before the softmax, so that they receive zero attention weight.

Sample implementation:

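import torch

from einops import rearrange

qkv = to_qvk(x)  # to_qvk = nn.Linear(dim, dim_head * num_heads * 3, bias=False); x: (b, t, dim)

q, k, v = tuple(rearrange(qkv, 'b t (d k h) -> k b h t d', k=3, h=num_heads))  # each: (b, h, t, d)

scale = dim_head ** -0.5  # 1 / sqrt(d_k)

scaled_dot_prod = torch.einsum('b h i d , b h j d -> b h i j', q, k) * scale  # (b, h, t, t)

scaled_dot_prod = scaled_dot_prod.masked_fill(mask, float('-inf'))  # mask: boolean, True = hide this position

attention = torch.softmax(scaled_dot_prod, dim=-1)  # masked positions get weight 0

out = torch.einsum('b h i j , b h j d -> b h i d', attention, v)

out = rearrange(out, 'b h t d -> b t (h d)')  # merge heads back: (b, t, h * d)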
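The effect of the mask can be checked in isolation. The sketch below uses a causal mask (each position may only attend to itself and earlier positions) as one common example; the helper names and the score values are made up for illustration.

```python
import math

NEG_INF = float("-inf")

def causal_mask(t):
    """mask[i][j] is True where query i must NOT attend to key j (that is, j > i)."""
    return [[j > i for j in range(t)] for i in range(t)]

def masked_softmax(scores, mask_row):
    """Softmax over one row of scores after setting masked entries to -inf."""
    masked = [NEG_INF if hide else s for s, hide in zip(scores, mask_row)]
    m = max(masked)  # at least one entry per row must stay unmasked
    exps = [math.exp(s - m) for s in masked]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

scores = [
    [0.5, 1.0, -0.3],
    [0.2, 0.1, 2.0],
    [1.5, 0.3, 0.7],
]
mask = causal_mask(3)
weights = [masked_softmax(row, m) for row, m in zip(scores, mask)]
```

Every masked entry ends up with exactly zero weight and the remaining weights in each row still sum to one. A row whose entries are all masked would produce NaN, so at least one position must stay visible.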

Self-Attention vs Cross-Attention

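Self-Attention: all of the keys, values, and queries come from the same place, e.g. the output of the previous layer in the encoder.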

- Each position in the encoder can attend to all positions in the previous layer of the encoder.

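Cross-Attention: the queries come from the previous decoder layer, while the keys and values come from the output of the encoder.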

- This allows every position in the decoder to attend over all positions in the input sequence.

- One way to realize cross-modal fusion.

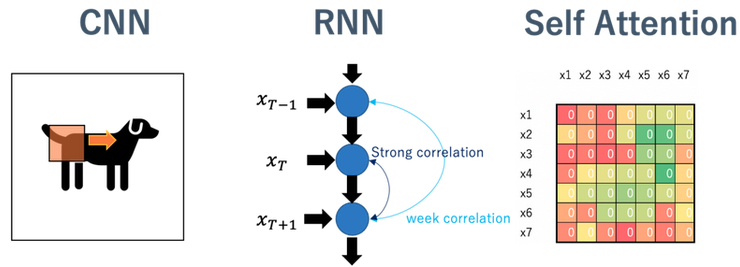
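In code, the two variants differ only in where the queries, keys, and values come from. The sketch below omits the learned projections for brevity (an assumption of this example), and the variable names and toy inputs are illustrative.

```python
import math

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of feature vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

def self_attention(x):
    """Queries, keys, and values all come from the same sequence."""
    return attention(x, x, x)

def cross_attention(decoder_states, encoder_outputs):
    """Queries come from the decoder; keys and values come from the encoder."""
    return attention(decoder_states, encoder_outputs, encoder_outputs)

encoder_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 source positions
decoder_states = [[0.5, 0.5], [1.0, -1.0]]              # 2 target positions

self_out = self_attention(encoder_outputs)
cross_out = cross_attention(decoder_states, encoder_outputs)
```

The output of cross-attention has one row per query position but is built from the other sequence's values. For cross-modal fusion, the two sequences would come from different modalities, for example language tokens querying image features.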

Inductive Bias

Inductive bias (IB) is the set of assumptions about the data that a model holds [1].

- CNN: information in the data is locally aggregated. (strong IB)

- RNN: the data at each time step is highly correlated with the previous time steps. (strong IB)

- Self-Attention: all features are simply correlated with each other. (weak IB)
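The difference between a strong locality bias and a weak one can be seen by perturbing a distant input. The sketch below compares a 1-D convolution with self-attention over scalar tokens; the kernel, the inputs, and the use of identity projections are assumptions made for illustration.

```python
import math

def conv1d(x, kernel):
    """'Valid' 1-D cross-correlation: each output sees only a local window."""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k))
            for i in range(len(x) - k + 1)]

def self_attention_1d(x):
    """Self-attention over scalar tokens with identity projections."""
    outputs = []
    for q in x:
        scores = [q * key for key in x]  # d_k = 1, so the scaling factor is 1
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        outputs.append(sum(e / total * v for e, v in zip(exps, x)))
    return outputs

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x_perturbed = x[:-1] + [60.0]  # change only the last element
kernel = [0.25, 0.5, 0.25]

conv_before, conv_after = conv1d(x, kernel), conv1d(x_perturbed, kernel)
attn_before, attn_after = self_attention_1d(x), self_attention_1d(x_perturbed)
```

The first convolution output is unaffected by the change at the far end of the sequence, because its window never reaches that element. The first self-attention output changes, because every position can attend to every other position.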

Resources: Books

Deep Learning

by Ian Goodfellow, Yoshua Bengio, Aaron Courville

Modern Robotics: Mechanics, Planning, and Control

by Kevin M. Lynch, Frank C. Park

Resources: Online Materials

- CS391R (UT Austin) by Yuke Zhu: lecture slides