ViT arxiv

Applies a pure Transformer directly to sequences of image patches.

- An image patch is a

- The patchify stem is implemented by a stride-

Influence

Performance scales with dataset size, and ViT has become a new de facto standard for image backbones.

Representing Image as Patches

For an input image

Sample implementation:

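from torch import nn

from einops import rearrange

# linear projection of each flattened patch to the embedding dimension

proj = nn.Linear((patch_size**2)*channels, dim)

# einops requires distinct axis names, so the two patch axes are p1 and p2

x_p = rearrange(img, 'b c (h p1) (w p2) -> b (h w) (p1 p2 c)', p1 = patch_size, p2 = patch_size)

embedding = proj(x_p)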

or equivalently (up to a reordering of the weights), as a strided convolution:

conv = nn.Conv2d(channels, dim, kernel_size=patch_size, stride=patch_size)

embedding = rearrange(conv(img), 'b c h w -> b (h w) c')
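The equivalence between the two patch embeddings above can be checked numerically. The following is a minimal NumPy sketch, not the reference implementation: `patchify` and `strided_conv` are hypothetical helpers written for illustration, the bias term is omitted, and the toy shapes are arbitrary.

```python
import numpy as np

def patchify(img, p):
    """[b, c, H, W] -> [b, h*w, p*p*c], i.e. 'b c (h p1) (w p2) -> b (h w) (p1 p2 c)'."""
    b, c, H, W = img.shape
    h, w = H // p, W // p
    x = img.reshape(b, c, h, p, w, p)      # split H into (h, p1) and W into (w, p2)
    x = x.transpose(0, 2, 4, 3, 5, 1)      # reorder to b, h, w, p1, p2, c
    return x.reshape(b, h * w, p * p * c)

def strided_conv(img, kernel, p):
    """Naive convolution with kernel_size == stride == p; kernel is [dim, c, p, p]."""
    b, c, H, W = img.shape
    h, w = H // p, W // p
    out = np.empty((b, kernel.shape[0], h, w))
    for i in range(h):
        for j in range(w):
            window = img[:, :, i * p:(i + 1) * p, j * p:(j + 1) * p]  # [b, c, p, p]
            out[:, :, i, j] = np.einsum('bcxy,dcxy->bd', window, kernel)
    return out

rng = np.random.default_rng(0)
b, c, H, W, p, dim = 2, 3, 8, 8, 4, 5      # toy sizes, chosen arbitrarily
img = rng.normal(size=(b, c, H, W))
kernel = rng.normal(size=(dim, c, p, p))

# Linear weight matching the conv kernel: reorder (c, p1, p2) -> (p1, p2, c), then flatten.
W_lin = kernel.transpose(0, 2, 3, 1).reshape(dim, p * p * c)

emb_linear = patchify(img, p) @ W_lin.T                     # [b, h*w, dim]
conv_out = strided_conv(img, kernel, p)                     # [b, dim, h, w]
emb_conv = conv_out.reshape(b, dim, -1).transpose(0, 2, 1)  # 'b c h w -> b (h w) c'

print(np.allclose(emb_linear, emb_conv))
```

The two paths agree only once the linear weight is permuted to match the `(p1 p2 c)` flattening order, which is why independently initialized `nn.Linear` and `nn.Conv2d` layers produce different numbers even though they span the same function class.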

ViT vs ResNet arxiv

- MHSAs and Convs exhibit opposite behaviors: MHSAs are low-pass filters, whereas Convs are high-pass filters.

- MHSAs improve not only accuracy but also generalization by flattening the loss landscape.
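One intuition for the low-pass behavior is that softmax attention weights are positive and sum to one, so each output token is a weighted average of the input tokens. The sketch below illustrates this with a hand-built attention matrix, which is an assumption made for the example (it is not learned, and real attention maps depend on the input). It compares how much a constant signal and a rapidly alternating signal survive one averaging step.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

n = 16
idx = np.arange(n)

# Hypothetical attention scores: tokens on a ring attend more strongly to nearby tokens.
gap = np.abs(idx[:, None] - idx[None, :])
dist = np.minimum(gap, n - gap)
attn = softmax(-dist.astype(float) ** 2 / 2.0)   # each row is positive and sums to 1

low = np.ones(n)          # lowest frequency: a constant signal
high = (-1.0) ** idx      # highest frequency: alternating +1 / -1

low_gain = np.abs(attn @ low).max()     # amplitude of the constant signal after mixing
high_gain = np.abs(attn @ high).max()   # amplitude of the alternating signal after mixing

print(low_gain, high_gain)
```

The constant signal passes through unchanged, while the alternating signal is strongly attenuated, which is the sense in which averaging acts as a low-pass filter.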

ViT vs ResNet

ViT models are less effective in capturing the high-frequency components (related to local structures) of images than CNN models [1].

For ViTs to capture high-frequency components:

ViT vs ResNet

Robustness to input perturbations:

- ResNet: noise has a high-frequency component and a localized structure [1]

- ViT: relatively low-frequency component and a large structure (The border is clearly visible in the

- When pre-trained with a sufficient amount of data, ViTs are at least as robust as their ResNet counterparts on a broad range of perturbations [2].

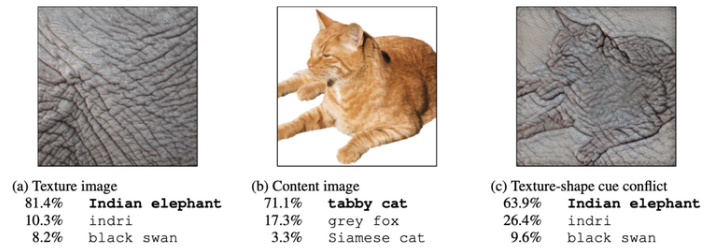

ViT vs ResNet arxiv

Is the decision based on texture or shape?

- ResNet: relies on texture rather than shape [1]

- ViT: slightly more robust to texture perturbations

- Human: much more robust to texture perturbations

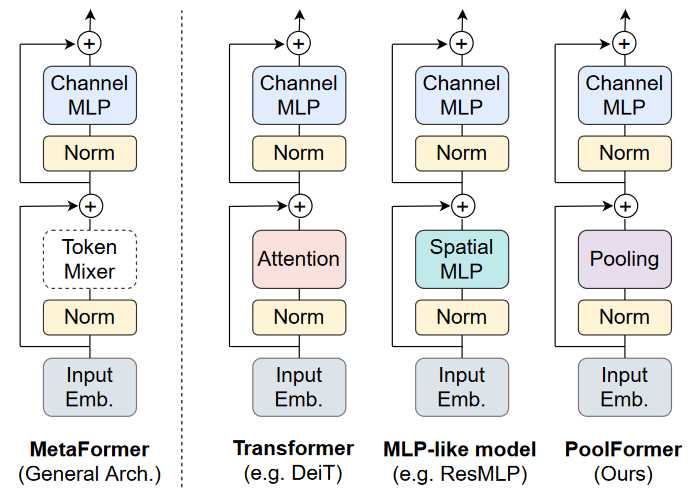

Do We Really Need Attention? arxiv

It turns out that it is not "Attention is All You Need".

- As long as tokens can be mixed, the MetaFormer architecture can achieve performance similar to that of the Transformer.

- The meta architecture of a Transformer layer can be viewed as a special case of a ResNet layer with the following components:

  - Token mixers (self-attention, etc.)

  - Position Encoding

  - Channel MLP (1x1 convolution)

  - Normalization (LayerNorm, etc.)
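The components listed above can be sketched as a block whose token mixer is a swappable argument. This is a simplified NumPy illustration, not the MetaFormer authors' code. The attention mixer has no learned projections, `mean_mixer` is a global-average stand-in for a non-attention mixer, position encoding is left out, and all names and sizes are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize each token over its channel dimension (no learned scale or shift)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def attention_mixer(x):
    """Single-head self-attention without learned projections (simplified)."""
    scores = x @ x.swapaxes(-1, -2) / np.sqrt(x.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w = w / w.sum(axis=-1, keepdims=True)
    return w @ x

def mean_mixer(x):
    """Parameter-free mixer: every token receives the mean over all tokens."""
    return np.broadcast_to(x.mean(axis=-2, keepdims=True), x.shape)

def channel_mlp(x, w1, w2):
    """Per-token two-layer MLP with ReLU, equivalent to 1x1 convolutions over channels."""
    return np.maximum(x @ w1, 0.0) @ w2

def metaformer_block(x, token_mixer, w1, w2):
    x = x + token_mixer(layer_norm(x))            # token mixing with residual
    x = x + channel_mlp(layer_norm(x), w1, w2)    # channel mixing with residual
    return x

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 6, 8))                    # [batch, tokens, channels]
w1 = rng.normal(size=(8, 32)) * 0.1
w2 = rng.normal(size=(32, 8)) * 0.1

out_attn = metaformer_block(x, attention_mixer, w1, w2)
out_mean = metaformer_block(x, mean_mixer, w1, w2)
print(out_attn.shape, out_mean.shape)
```

Only the `token_mixer` argument changes between the two calls; the residual connections, normalization, and channel MLP are shared, which is the structural point of the MetaFormer view.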

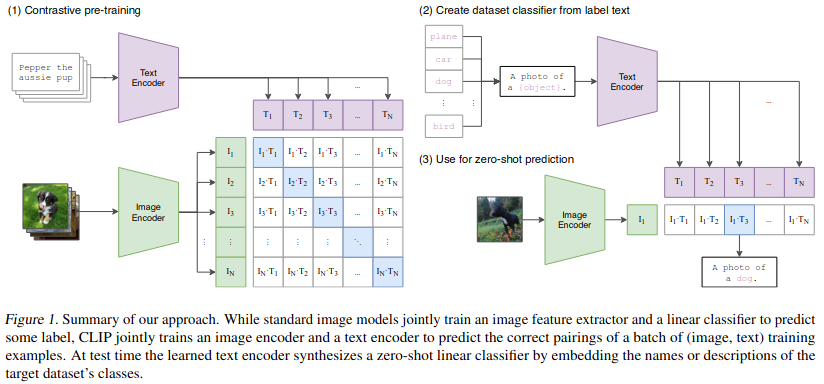

CLIP arxiv github

Learning directly from raw text about images leverages a broader source of supervision compared to using a fixed set of predetermined object categories. CLIP introduced a simple yet effective contrastive objective to learn a vision-language embedding space, where similar concepts are pulled together and different concepts are pushed apart.

CLIP arxiv github

Sample implementation (NumPy-like pseudocode; the encoders and helper functions are not defined here):

# extract feature representations of each modality

I_f = image_encoder(I) #[n, d_i]

T_f = text_encoder(T) #[n, d_t]

# joint multimodal embedding [n, d_e]

I_e = l2_normalize(np.dot(I_f, W_i), axis=1)

T_e = l2_normalize(np.dot(T_f, W_t), axis=1)

# scaled pairwise cosine similarities [n, n]

logits = np.dot(I_e, T_e.T) * np.exp(t)

# symmetric loss function

labels = np.arange(n)

loss_i = cross_entropy_loss(logits, labels, axis=0)

loss_t = cross_entropy_loss(logits, labels, axis=1)

loss = (loss_i + loss_t)/2
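A runnable version of the pseudocode above can be sketched in NumPy by supplying the missing helpers. The encoders are skipped here: `clip_loss` takes precomputed features, and `l2_normalize`, `cross_entropy_loss`, and the toy inputs are illustrative assumptions rather than the official CLIP code.

```python
import numpy as np

def l2_normalize(x, axis=1):
    return x / np.linalg.norm(x, axis=axis, keepdims=True)

def cross_entropy_loss(logits, labels, axis):
    """Mean cross-entropy where the softmax is taken along `axis` of an [n, n] matrix."""
    m = logits.max(axis=axis, keepdims=True)
    lse = m + np.log(np.exp(logits - m).sum(axis=axis, keepdims=True))  # stable log-sum-exp
    log_probs = logits - lse
    n = logits.shape[0]
    if axis == 1:
        picked = log_probs[np.arange(n), labels]   # row k, target column labels[k]
    else:
        picked = log_probs[labels, np.arange(n)]   # column k, target row labels[k]
    return -picked.mean()

def clip_loss(I_f, T_f, W_i, W_t, t):
    """Symmetric contrastive loss; I_f is [n, d_i], T_f is [n, d_t], t is a log-temperature."""
    I_e = l2_normalize(I_f @ W_i, axis=1)          # joint embedding [n, d_e]
    T_e = l2_normalize(T_f @ W_t, axis=1)
    logits = I_e @ T_e.T * np.exp(t)               # scaled pairwise cosine similarities
    labels = np.arange(I_f.shape[0])               # the i-th image matches the i-th text
    loss_i = cross_entropy_loss(logits, labels, axis=0)
    loss_t = cross_entropy_loss(logits, labels, axis=1)
    return (loss_i + loss_t) / 2

# Toy check: perfectly aligned pairs versus deliberately mismatched pairs.
eye = np.eye(4)
aligned = clip_loss(eye, eye, eye, eye, t=np.log(100.0))
mismatched = clip_loss(eye, np.roll(eye, 1, axis=0), eye, eye, t=np.log(100.0))
print(aligned, mismatched)
```

With matched pairs on the diagonal the loss is close to zero, and shuffling the texts makes it large, which is the pull-together and push-apart behavior the objective is designed to produce.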

Object Detection

YOLO

DETR

Segmentation

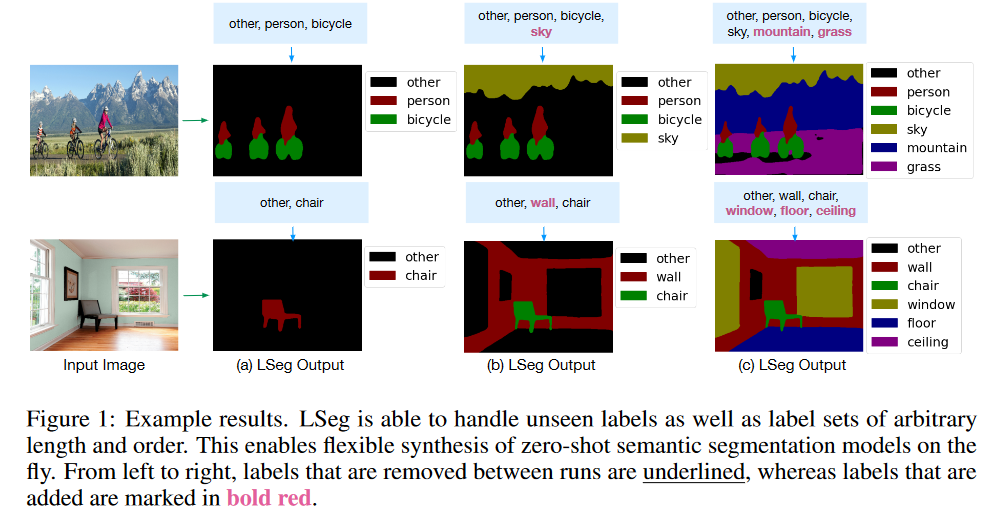

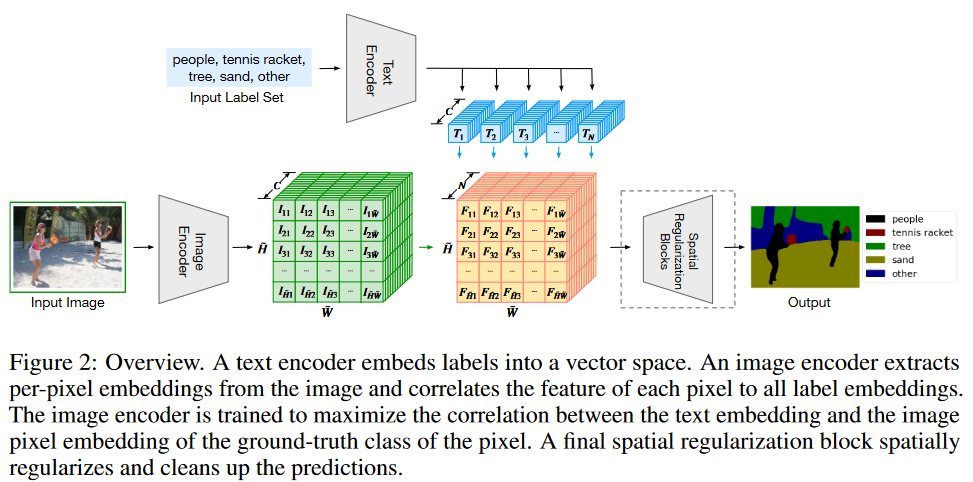

LSeg arxiv github

The text embeddings provide a flexible label representation and help generalize to previously unseen categories at test time, without retraining or even requiring a single additional training sample.

LSeg arxiv github

Language-driven Semantic Segmentation (LSeg) embeds text labels and image pixels into a common space, and assigns the closest label to each pixel.
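The per-pixel assignment described above can be sketched as a nearest-label lookup in the shared embedding space. This is a minimal illustration under stated assumptions: the embeddings are made-up toy vectors rather than outputs of the LSeg encoders, cosine similarity is assumed as the closeness measure, and `assign_labels` and the label names are hypothetical.

```python
import numpy as np

def assign_labels(pixel_emb, text_emb):
    """pixel_emb: [H, W, d], text_emb: [k, d] -> [H, W] index of the closest label."""
    p = pixel_emb / np.linalg.norm(pixel_emb, axis=-1, keepdims=True)
    t = text_emb / np.linalg.norm(text_emb, axis=-1, keepdims=True)
    sim = p @ t.T                     # cosine similarity of every pixel to every label
    return sim.argmax(axis=-1)

label_names = ["dog", "grass", "sky"]           # hypothetical label set
text_emb = np.eye(3)                            # toy text embeddings, one per label
pixel_emb = np.array([
    [[0.9, 0.1, 0.0], [0.1, 0.8, 0.1]],
    [[0.0, 0.2, 0.7], [0.6, 0.3, 0.1]],
])                                              # toy 2x2 image with d = 3

labels = assign_labels(pixel_emb, text_emb)
print([[label_names[k] for k in row] for row in labels])
```

Because the label set enters only through `text_emb`, adding a category at test time amounts to appending one more text embedding row, with no change to the pixel embeddings.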

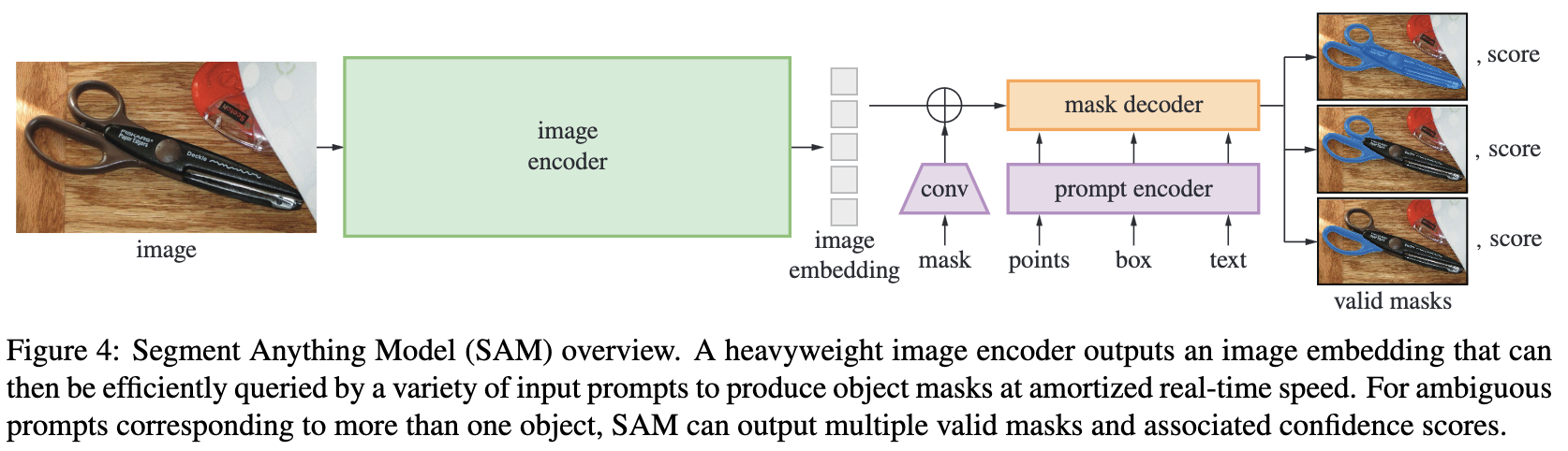

SAM arxiv