NLMap (4/4)

To complete a task specified by a human instruction, the robot queries the scene representation for relevant information:

- Parse the natural-language instruction into a list of relevant object names.

- Use the names as keys to query object locations and availability.

- Generate executable options based on what is found in the scene, then plan and execute as instructed.
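The three steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions: the scene representation is modelled as a plain dictionary, the instruction parser is a keyword match standing in for a language model, and every name here (`SceneObject`, `SCENE`, `KNOWN_OBJECTS`, `parse_instruction`, `query_scene`, `generate_options`) is hypothetical rather than part of NLMap.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    name: str
    location: tuple  # (x, y) in map coordinates (assumed convention)
    available: bool


# Hypothetical scene representation: object name -> list of detections.
SCENE = {
    "sponge": [SceneObject("sponge", (1.0, 2.5), True)],
    "coke can": [SceneObject("coke can", (3.2, 0.4), False)],
}

# Hypothetical vocabulary used by the stand-in parser below.
KNOWN_OBJECTS = ("sponge", "coke can", "apple")


def parse_instruction(instruction):
    """Stand-in for the language-model parser: keyword match on a fixed vocabulary."""
    text = instruction.lower()
    return [name for name in KNOWN_OBJECTS if name in text]


def query_scene(names, scene):
    """Use each name as a key; keep only detections marked as available."""
    return {n: [o for o in scene.get(n, []) if o.available] for n in names}


def generate_options(found):
    """Turn the objects that were found into simple executable options."""
    return [
        f"go to {name} at {obj.location}"
        for name, objs in found.items()
        for obj in objs
    ]


names = parse_instruction("Wipe the table with a sponge and bring me a coke can")
found = query_scene(names, SCENE)
options = generate_options(found)
print(options)
```

Because the coke can is marked unavailable in this toy scene, only the sponge produces an option; a planner would then choose among the remaining options.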

CLIP-Nav (1/3)

CLIP-Nav [1] examines CLIP’s capability in making sequential navigational decisions, and studies how it influences the path that an agent takes.

- Instruction Breakdown: Decompose coarse-grained instructions into keyphrases using LLMs.

- Vision-Language Grounding: Ground keyphrases in the environment using CLIP.

- Zero-Shot Navigation: Utilize the CLIP scores to make navigational decisions.

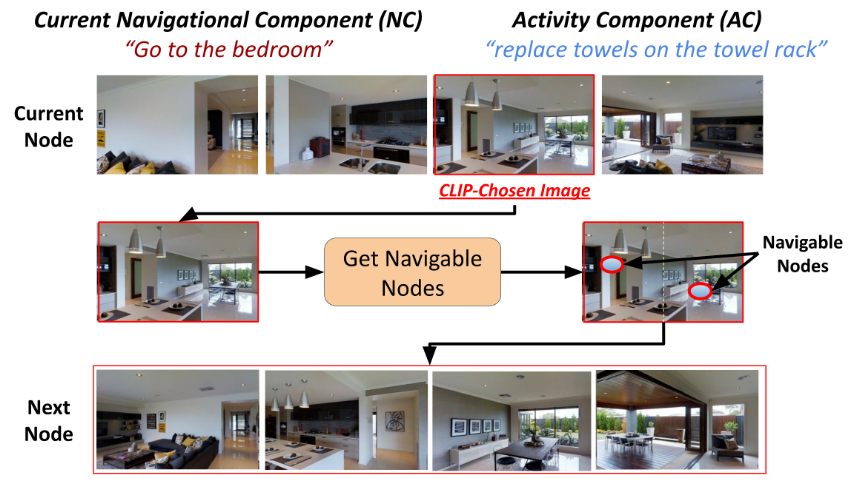

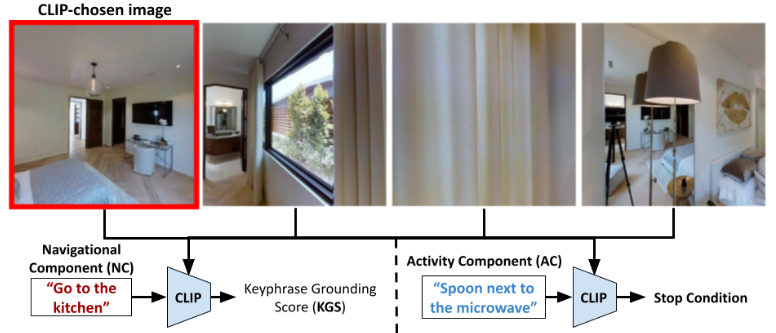

CLIP-Nav (2/3)

- Ground the navigation component (NC) of the instruction on all the split images to obtain Keyphrase Grounding Scores (KGS). The CLIP-chosen image is the one with the highest KGS, and it drives the navigation algorithm.

- Ground the activity component (AC) and use its grounding score to determine whether the agent has reached the target location (stop condition).
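A minimal sketch of the two decisions described above, assuming the grounding scores have already been computed by CLIP and are passed in as plain floats. The function names and the fixed `threshold` are hypothetical; the slides do not say how the stop condition is derived from the score, so a simple threshold rule is assumed here.

```python
def choose_image(kgs_scores):
    """Return the index of the split image with the highest KGS."""
    return max(range(len(kgs_scores)), key=lambda i: kgs_scores[i])


def should_stop(ac_score, threshold=0.3):
    """Assumed stop rule: stop once the AC grounding score reaches the threshold."""
    return ac_score >= threshold


# Illustrative scores for the split images (made-up values).
kgs = [0.12, 0.31, 0.27, 0.08]
chosen = choose_image(kgs)
print(chosen, should_stop(0.1), should_stop(0.5))
```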

CLIP-Nav (3/3)

At each time step:

- Split the panorama into four images and obtain the CLIP-chosen image.

- Obtain the adjacent navigable nodes visible from this image using the Matterport Simulator, and choose the closest node.

This is repeated until the stop condition is reached.
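The loop can be sketched on a toy graph. Everything below is an assumption made for illustration: `GRAPH` replaces the Matterport Simulator, the per-view scores stand in for CLIP grounding scores, and `navigate`, `stop_threshold`, and `max_steps` are hypothetical names and parameters.

```python
# Toy environment. For each node: "ac" is the AC grounding score at that node,
# and "views" holds four (KGS, {visible neighbour: distance}) pairs, one per split image.
GRAPH = {
    "hall": {"ac": 0.05, "views": [
        (0.10, {}),
        (0.40, {"corridor": 2.0, "kitchen": 5.0}),
        (0.20, {"office": 1.0}),
        (0.05, {}),
    ]},
    "corridor": {"ac": 0.10, "views": [
        (0.15, {"hall": 2.0}),
        (0.55, {"bathroom": 1.5}),
        (0.10, {}),
        (0.05, {}),
    ]},
    "bathroom": {"ac": 0.80, "views": [
        (0.30, {"corridor": 1.5}), (0.10, {}), (0.10, {}), (0.10, {}),
    ]},
    "kitchen": {"ac": 0.05, "views": [
        (0.20, {"hall": 5.0}), (0.10, {}), (0.10, {}), (0.10, {}),
    ]},
    "office": {"ac": 0.05, "views": [
        (0.20, {"hall": 1.0}), (0.10, {}), (0.10, {}), (0.10, {}),
    ]},
}


def navigate(graph, start, stop_threshold=0.5, max_steps=10):
    """Follow the CLIP-chosen view to the closest visible node until the stop rule fires."""
    node, path = start, [start]
    for _ in range(max_steps):
        if graph[node]["ac"] >= stop_threshold:  # assumed stop condition
            break
        # CLIP-chosen image: the view with the highest KGS.
        _, neighbours = max(graph[node]["views"], key=lambda v: v[0])
        if not neighbours:  # nothing navigable is visible in the chosen view
            break
        node = min(neighbours, key=neighbours.get)  # closest visible node
        path.append(node)
    return path


path = navigate(GRAPH, "hall")
print(path)  # ['hall', 'corridor', 'bathroom']
```

The `max_steps` cap is a safeguard added for this sketch so that the loop terminates even if the stop condition is never met.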